Reading fast data from the ctrlX PLC, for example one value every 1 ms, requires an efficient method. This article demonstrates how to acquire such data from the PLC App in real time and store it in InfluxDB for later use without losing samples.

Prerequisites

- ctrlX PLC App

- ctrlX InfluxDB App

- ctrlX Node-RED App

- Util library in ctrlX PLC Engineering

PLC code

The PLC writes data into an internal buffer array. Once this array holds the maximum number of samples, it is copied into the external buffer array and the buffer counter (buffer_id) is incremented. From outside the PLC, e.g. in Node-RED, we can then observe the buffer counter. If it changes, a new buffer array is ready, and we can read and post-process it.

The external buffer actually consists of two arrays: one for the values and one for the timestamps.

With the following variables, we can control the behavior of the data sampling:

- bStart: set it to TRUE to start the data acquisition

- num_samples: the number of data points to write into the buffer array

- input_value: the value that will be recorded

- trigger: the trigger is observed; whenever it changes, the current value is stored in the buffer array

- sample_interval: acts as a multiplier of the task cycle time, e.g. with a 1 ms task, set it to 10 to record a value every 10 ms
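The relation between these settings can be sketched with a little arithmetic. The helper below is only an illustration: `bufferTiming` and `taskCycleMs` are hypothetical names, and the 1 ms task cycle is assumed from the task configuration described later in this article.

```javascript
// Sketch: derive the sampling period and the buffer fill time from the PLC settings.
// taskCycleMs is an assumption (the 1 ms cycle time of the fast task).
function bufferTiming(taskCycleMs, sampleInterval, numSamples) {
    const samplePeriodMs = taskCycleMs * sampleInterval; // time between two stored samples
    const bufferFillMs = samplePeriodMs * numSamples;    // time until buffer_id increments
    return { samplePeriodMs, bufferFillMs };
}

// GVL defaults used in this article: sample_interval = 10, num_samples = 5000
const timing = bufferTiming(1, 10, 5000);
console.log(timing); // { samplePeriodMs: 10, bufferFillMs: 50000 }
```

With these values a new buffer becomes available every 50 s, which is also the time the reader has to fetch the arrays before they are overwritten.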

1. Create the Function Block "daq"

Declaration:

FUNCTION_BLOCK daq

VAR_INPUT

num_samples : UINT := 1000;

input_value: UINT;

trigger: UDINT;

END_VAR

VAR_OUTPUT

buffer_id : INT := 0;

buffer_value : ARRAY [0..10000] OF UINT;

buffer_timestamp : ARRAY [0..10000] OF ULINT;

END_VAR

{attribute 'hide'}

VAR

run : BOOL := TRUE;

num_values : INT := 16;

i : INT;

index : INT := 0;

sample : INT := 0;

buffer_internal_value : ARRAY [0..10000] OF UINT;

buffer_internal_timestamp : ARRAY [0..10000] OF ULINT;

rtc : ULINT;

trigger_old: UDINT := 0;

END_VAR

Implementation:

Util.SysTimeRtcHighResGet(pTimestamp:= rtc);

IF trigger <> trigger_old THEN

buffer_internal_value[sample] := input_value;

buffer_internal_timestamp[sample] := rtc;

sample := sample + 1;

END_IF

IF sample >= num_samples THEN

sample := 0;

Util.SysMemCpy(pDest:=ADR(buffer_value), pSrc:=ADR(buffer_internal_value), udiCount:= num_samples * SIZEOF(input_value));

Util.SysMemCpy(pDest:=ADR(buffer_timestamp), pSrc:=ADR(buffer_internal_timestamp), udiCount:= num_samples * SIZEOF(rtc));

buffer_id := buffer_id + 1;

END_IF

trigger_old := trigger;

2. Create the GVL

VAR_GLOBAL

{attribute 'symbol' := 'read'} i: UINT;

{attribute 'symbol' := 'read'} trigger: UINT := 0;

{attribute 'symbol' := 'readwrite'} bStart: BOOL;

{attribute 'symbol' := 'readwrite'} sample_interval: UINT := 10;

{attribute 'symbol' := 'read'} buffer_id: INT;

{attribute 'symbol' := 'read'} num_samples: UINT := 5000;

{attribute 'symbol' := 'read'} buffer_value: ARRAY [0..10000] OF UINT;

{attribute 'symbol' := 'read'} buffer_timestamp: ARRAY [0..10000] OF ULINT;

END_VAR

3. Create the fast task

Declaration:

PROGRAM fast_task

VAR

daq: daq;

END_VAR

Implementation:

IF bStart THEN

IF i MOD sample_interval = 0 THEN

trigger := trigger + 1;

END_IF

i := i+1;

END_IF

daq(

num_samples:= num_samples,

input_value:= i,

trigger:= trigger,

buffer_id=> buffer_id,

buffer_value=> buffer_value,

buffer_timestamp=> buffer_timestamp

);

Configure the task so that it will run every millisecond.

Node-RED Flow

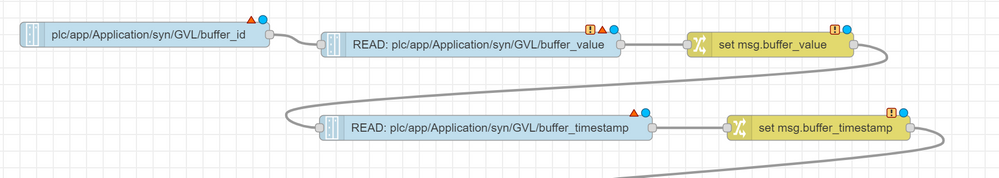

Now create a flow that observes the buffer_id variable. When it changes, the flow should read the buffer_value and buffer_timestamp arrays and store them in msg.buffer_value and msg.buffer_timestamp, as shown in the image.
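If the change detection is done in a function node rather than with a built-in node, it could look like the following sketch. `checkBufferId` is a hypothetical helper, not part of the original flow. It also reports skipped buffers, which would indicate that the flow read too slowly and data was lost.

```javascript
// Hypothetical helper: compares the current buffer_id with the last one seen.
// buffer_id is an INT on the PLC side, so it wraps from 32767 to -32768.
function checkBufferId(lastId, currentId) {
    if (lastId === undefined) {
        // First value after start: use it as baseline, no buffer announced yet
        return { isNew: false, missed: 0 };
    }
    // Difference modulo 2^16 handles the INT wrap-around
    const delta = (((currentId - lastId) % 65536) + 65536) % 65536;
    return { isNew: delta > 0, missed: Math.max(delta - 1, 0) };
}

// Possible use inside a Node-RED function node (assuming msg.payload = buffer_id):
//   const result = checkBufferId(context.get('lastId'), msg.payload);
//   context.set('lastId', msg.payload);
//   if (!result.isNew) { return null; }  // nothing to fetch yet
//   if (result.missed > 0) { node.warn(`missed ${result.missed} buffer(s)`); }
//   return msg;

console.log(checkBufferId(4, 5));          // { isNew: true, missed: 0 }
console.log(checkBufferId(4, 7));          // { isNew: true, missed: 2 }
console.log(checkBufferId(32767, -32768)); // { isNew: true, missed: 0 }
```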

The function node to write the data into the database:

class fieldObject {

constructor (time, value) {

this.time = time;

this.value = value;

}

}

// Build one [fields, tags] pair per sample

msg.payload = [];

// No tags are used in this example

const tagObject = {};

for (let i = 0; i < msg.buffer_value.length; i++) {

msg.payload.push([new fieldObject(msg.buffer_timestamp[i], msg.buffer_value[i]), tagObject]);

}

return msg;
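Note that the buffer arrays are declared with 10001 elements, while only the first num_samples entries are filled by the PLC. If the arrays arrive in Node-RED at full length, the unused entries would be written as well. The following variant is a sketch that limits the loop to the valid samples; `buildPoints` and its `numSamples` parameter are hypothetical and assume that the value of num_samples is also read from the PLC.

```javascript
// Sketch: build the point list from the first numSamples entries only.
// numSamples is a hypothetical extra input (e.g. read from the PLC variable num_samples).
function buildPoints(timestamps, values, numSamples) {
    const count = Math.min(numSamples, timestamps.length, values.length);
    const points = [];
    for (let i = 0; i < count; i++) {
        // Same [fields, tags] pair layout as in the function node above
        points.push([{ time: timestamps[i], value: values[i] }, {}]);
    }
    return points;
}

// Only the first three entries are valid samples here
const points = buildPoints([1000, 1001, 1002, 0, 0], [7, 8, 9, 0, 0], 3);
console.log(points.length); // 3
```

In a function node this could be used as `msg.payload = buildPoints(msg.buffer_timestamp, msg.buffer_value, msg.num_samples);`, where `msg.num_samples` is again an assumed property.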

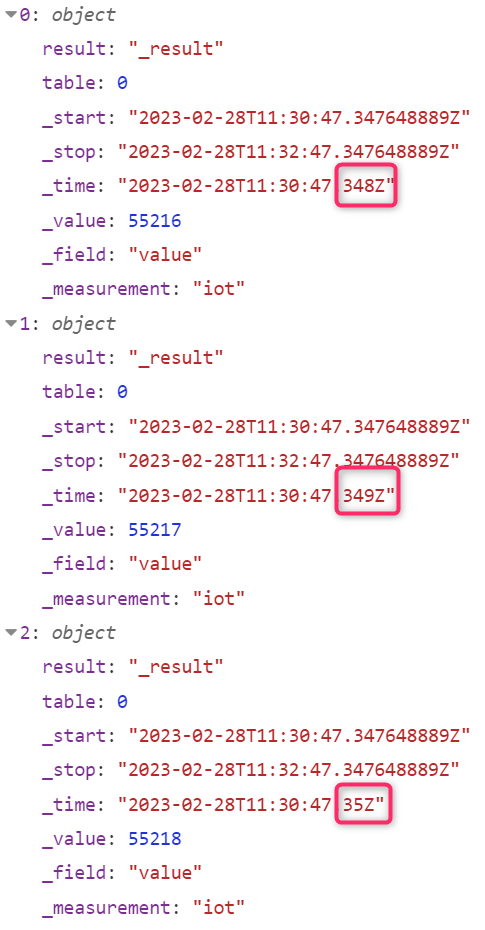

If everything went according to plan, the raw data is visible in InfluxDB, with consecutive data points spaced by the configured sampling period: 1 ms when sample_interval is 1, or 10 ms with the GVL default of 10.
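To check the spacing programmatically instead of by eye, a small helper can scan the timestamp array for irregular gaps. This is only a sketch: `findGaps` is a hypothetical function, and it assumes that the timestamps are in milliseconds.

```javascript
// Hypothetical check: returns the positions where the spacing between two
// consecutive timestamps deviates from the expected sample period.
// Assumption: timestamps and expectedMs are both in milliseconds.
function findGaps(timestamps, expectedMs, toleranceMs = 0) {
    const gaps = [];
    for (let i = 1; i < timestamps.length; i++) {
        const delta = timestamps[i] - timestamps[i - 1];
        if (Math.abs(delta - expectedMs) > toleranceMs) {
            gaps.push({ index: i, delta });
        }
    }
    return gaps;
}

// One sample is missing between 102 and 104
console.log(findGaps([100, 101, 102, 104, 105], 1)); // [ { index: 3, delta: 2 } ]
```

An empty result means that every sample arrived with the expected spacing. The optional tolerance can be raised if the clock values jitter slightly.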

Node-RED Debug

Importing the Project

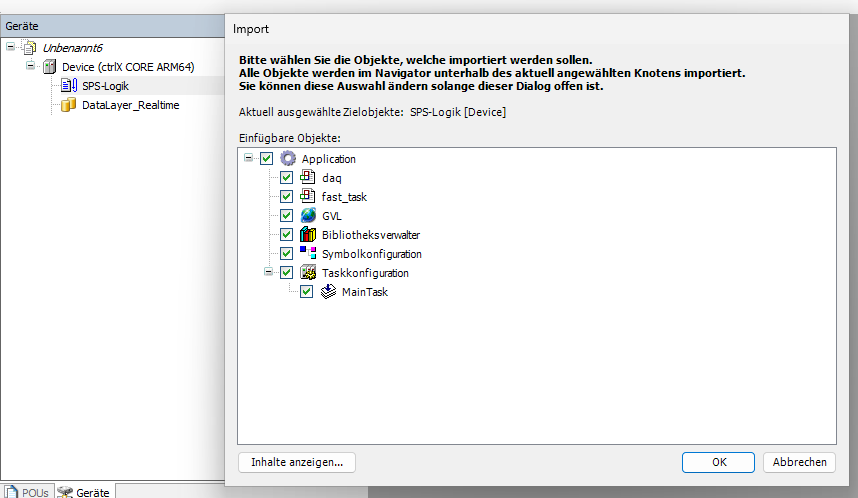

First select "SPS-Logic" in your project, then choose "Project" -> "import".
