- Introduction

- Overview

- Prerequisites

- Build ONNX Model

- Build snap to run ONNX

- Modify main.py

- Modify snapcraft.yaml

- Build and deploy snap

Introduction

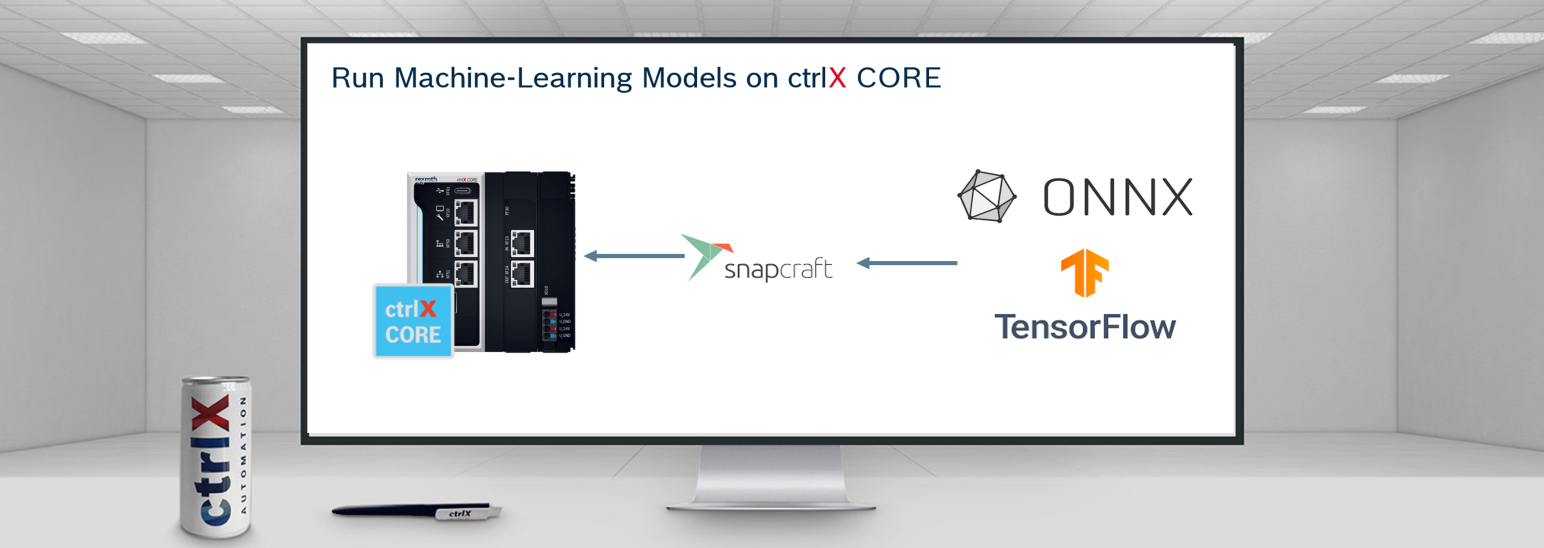

The ctrlX CORE, developed by Bosch Rexroth, is an industrial control platform that can run ONNX models to add machine-learning inference to automation tasks. The workflow covered here is: make sure the ONNX model is compatible with the target hardware, run it with ONNX Runtime inside an app built with the SDK of ctrlX AUTOMATION, deploy that app on the ctrlX CORE, and connect it to the automation workflow so that decisions can be made from live data.

Overview

Prerequisites

- SDK of ctrlX AUTOMATION (version 1.20.0)

- ctrlX AUTOMATION

- ctrlX CORE (firmware > version 1.20)

- ctrlX WORKS to install virtualCORE (> version 1.20)

- Set up the ctrlX WORKS app build environment

- Visual Studio Code

Build ONNX Model

First, a sample random forest machine-learning model was built with the sklearn library and converted with the help of the skl2onnx library. I saved the model in .onnx format and used it to build the example snap below.

The model takes two input parameters and outputs a classification: whether the product is of good or bad quality.

Train a model that fits your use case, then follow the steps below to build a snap for the ctrlX CORE.

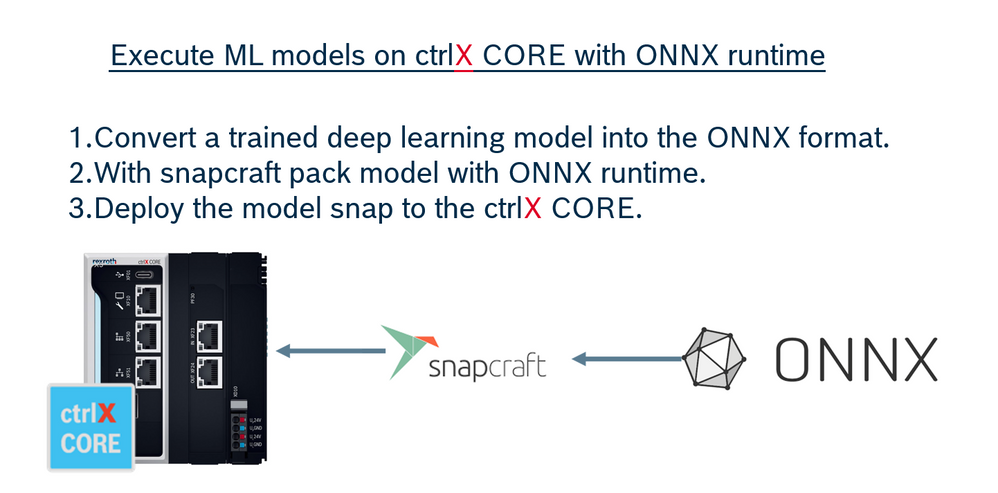

Build snap to run ONNX

The SDK of ctrlX AUTOMATION contains many development samples; in this case, I used "ctrlx-automation-sdk/samples-python/datalayer.provider". Open this folder inside the app build environment.

Changes need to be made at:

- main.py

- setup.py

- requirements.txt

- snap/snapcraft.yaml

Before making any changes, create a new folder inside this directory and name it model. Move your ONNX model into this folder.
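Because the model now lives in its own folder, main.py needs a way to find it both during development and inside the installed snap. One possible approach is sketched below. It assumes the model folder ends up at the root of the snap (which depends on how snapcraft.yaml packs it) and relies on the `SNAP` environment variable that snapd sets for running snaps; the helper name `resolve_model_path` is hypothetical.

```python
import os
from pathlib import Path


def resolve_model_path(filename, env=None):
    """Return the expected location of an ONNX file in the model folder."""
    env = os.environ if env is None else env
    snap_root = env.get("SNAP")
    if snap_root:
        # Running as an installed snap: look below the snap's install directory.
        base = Path(snap_root)
    else:
        # Running from the project folder in the app build environment.
        base = Path.cwd()
    return base / "model" / filename


if __name__ == "__main__":
    print(resolve_model_path("quality.onnx"))
```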

Modify main.py

Once the snap is deployed, main.py creates the datalayer nodes, reads the input values from them, runs those values through the machine-learning model, and writes the output back to the datalayer.

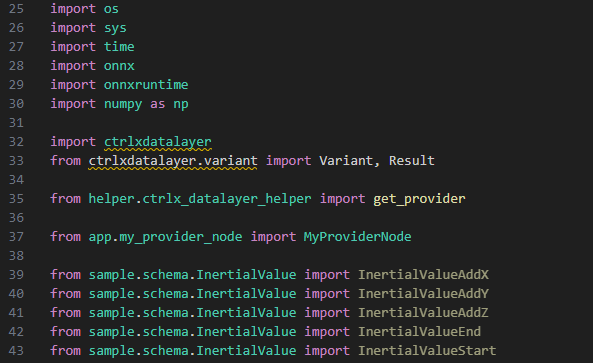

At the top, import the libraries needed to run your model and manipulate data. If a library cannot be found, install it from the terminal with the pip command.

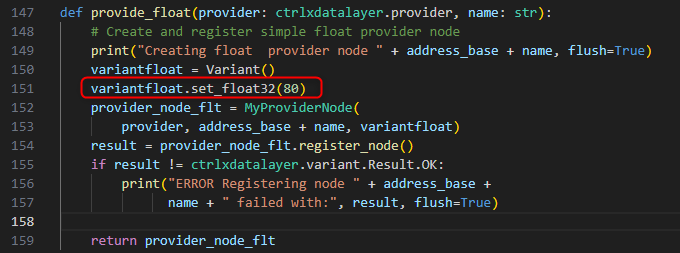

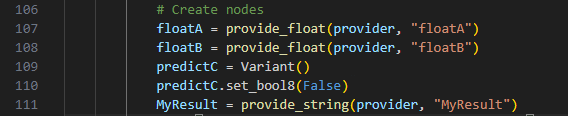

Before doing anything with the model, we have to define the data types needed for input and output. These will later be visible as datalayer nodes on the ctrlX CORE.

The provider code that defines these data types is shown in the image below.

At run time, the function calls the provider, creates a variant (line 150), sets it to type float (line 151), and gives it an initial value (lines 152-158). It then tries to register the node and handles the error if something goes wrong; at the end, it returns the node.

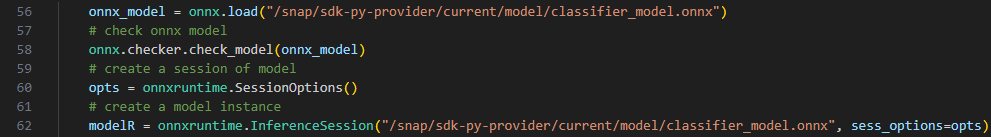

After defining the main function, load the ONNX model and start an instance of it, as shown in the picture below.

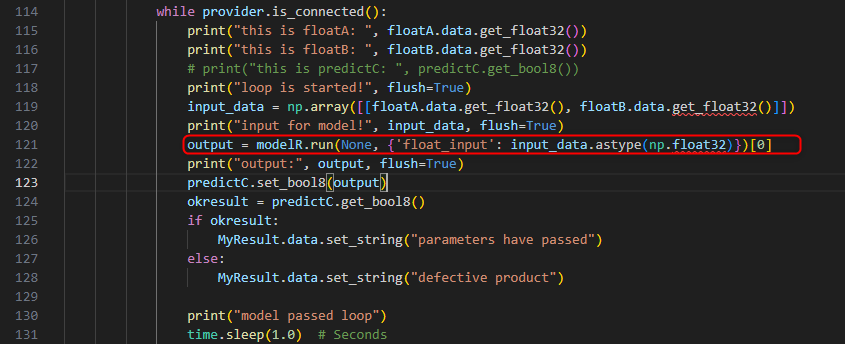
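For readers who cannot make out the screenshot, loading the model and running one prediction with ONNX Runtime might look roughly like this. It is a sketch under assumptions, not the code from the picture: the helper names are hypothetical, and the model is assumed to take one float32 input of shape (1, 2) and to return the predicted class label as its first output, which is what skl2onnx typically produces for a classifier.

```python
import numpy as np


def load_session(model_path):
    """Create an ONNX Runtime inference session that runs on the CPU."""
    # Imported here so predict_quality can be tried out with a stand-in
    # session on machines where onnxruntime is not installed.
    import onnxruntime as ort

    return ort.InferenceSession(str(model_path), providers=["CPUExecutionProvider"])


def predict_quality(session, value_a, value_b):
    """Run one sample through the model and return the predicted label."""
    # Ask the model for its input name instead of hard-coding it.
    input_name = session.get_inputs()[0].name
    # One row with two float32 features, matching the assumed model input.
    features = np.array([[value_a, value_b]], dtype=np.float32)
    # Passing None as output names requests all outputs; the first is the label.
    outputs = session.run(None, {input_name: features})
    return int(outputs[0][0])
```

A typical call would be `session = load_session("model/quality.onnx")` once at start-up, followed by `predict_quality(session, a, b)` for each new pair of values.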

To run the ONNX model continuously, a while loop needs to be defined as shown below.

Depending on the complexity of the model, you might have to add or remove Python lines.

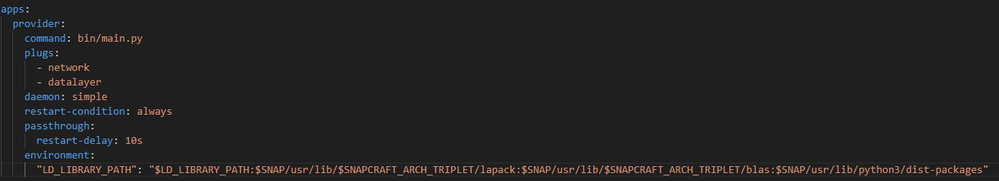

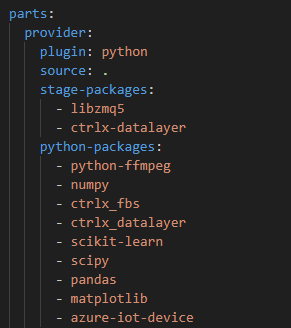

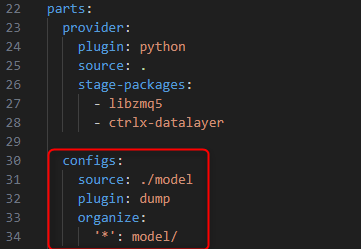
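The overall shape of such a loop can be sketched as below. To keep the example independent of the datalayer API, reading the inputs, running the model, and writing the result are passed in as plain functions; these parameter names, the one-second default period, and the error handling are assumptions for illustration rather than the code in the screenshots.

```python
import time


def run_inference_loop(read_inputs, infer, write_output,
                       period_s=1.0, keep_running=lambda: True):
    """Read two values, run the model, and write the result once per period."""
    cycles = 0
    while keep_running():
        started = time.monotonic()
        try:
            value_a, value_b = read_inputs()
            write_output(infer(value_a, value_b))
        except Exception as exc:
            # Report the failed cycle but keep the loop alive.
            print(f"inference cycle failed: {exc}")
        cycles += 1
        # Sleep only for what is left of the period after the work is done.
        remaining = period_s - (time.monotonic() - started)
        if remaining > 0:
            time.sleep(remaining)
    return cycles


if __name__ == "__main__":
    # Stand-in callables: fixed inputs, a threshold "model", printed output.
    remaining_ticks = iter([True, True, False])
    run_inference_loop(lambda: (0.9, 0.8), lambda a, b: int(a + b > 1.0), print,
                       period_s=0.1, keep_running=lambda: next(remaining_ticks))
```

In the real app, `read_inputs` and `write_output` would wrap the datalayer node values, and `keep_running` would check whether the provider is still connected.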

Modify snapcraft.yaml

The snapcraft.yaml file is necessary as it serves as a configuration file for defining the build process, required dependencies, and other essential metadata for building the snap package. It provides a declarative way to specify how the application should be packaged and distributed as a snap.

Inside the "parts:" block, we have to add a "configs:" block in order to pack the model folder for ONNX. If you have multiple folders for different models, each of them has to be defined inside the configs block.

In the apps section, the environment needs to be modified, as shown in the picture below, only if you are building a snap for the real core. For the virtual core, it is not necessary.

At parts -> provider -> python-packages, add the required libraries that are imported at run time.
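As a rough orientation, the relevant fragment of snapcraft.yaml could look something like the sketch below. It is an assumption-based illustration rather than the author's file: it shows only the keys discussed here, uses the standard snapcraft `dump` plugin to copy the model folder into the snap, and lists `onnxruntime` and `numpy` as example packages. Keep all other keys from the SDK sample, and take the environment entries for the real core from the sample and the screenshots rather than from this fragment.

```yaml
parts:
  provider:
    plugin: python
    source: .
    # Libraries imported by main.py at run time (example list, adjust to your model).
    python-packages:
      - onnxruntime
      - numpy

  configs:
    # Copy the contents of ./model into the snap under model/.
    plugin: dump
    source: ./model
    organize:
      '*': model/
```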

Build and deploy snap

For the virtual core: "Terminal->Run build task..->build-snap-amd64"

For the real core: "Terminal->Run build task..->build-snap-arm64" (for the real core, depending on the dependencies, you might have to build the snap on AWS ARM instances, a Raspberry Pi, or similar arm64 hardware).

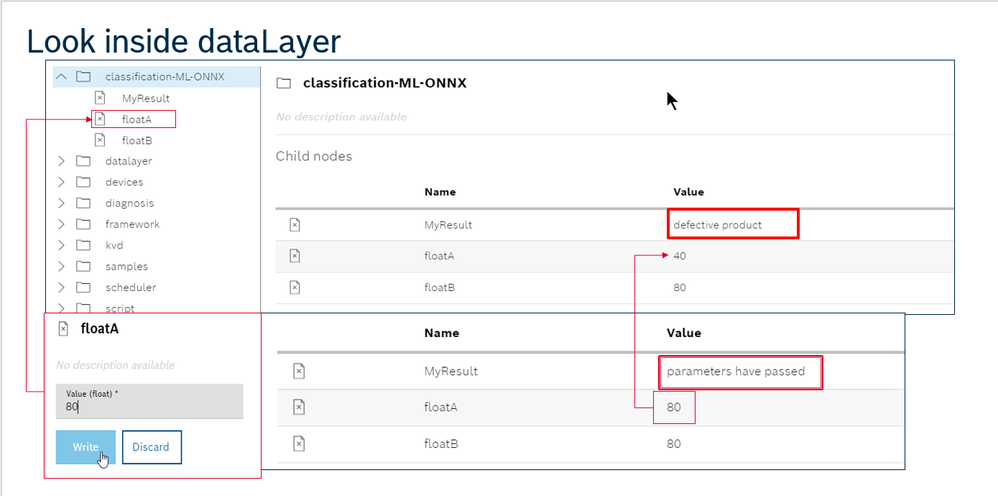

After the snap is installed on the CORE, it starts running the model every second. It will look something like the picture below.

Thank you for taking the time to read this article. I hope you found it informative and enjoyable. If you have any questions, comments or encounter any unusual problems with the project, feel free to leave them in the comments section below. I would love to hear from you and continue the conversation. Your feedback is always appreciated!